There is Exogeneity, and Then There is Strict Exogeneity

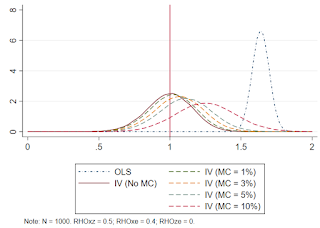

Panel data has become quite abundant. As a result, fixed effects models have become prevalent in applied research. But this week I handled a paper at one of the journals for which I am on the editorial board and was reminded of a mistake that I have seen all too frequently over the years. Most researchers are probably aware of the issue I am highlighting, but indulge me.

In a cross-sectional regression model, we have

$$y_i = a + b x_i + e_i$$

OLS is unbiased if $x$ is exogenous, which requires that $\mathrm{Cov}(x_i, e_i) = 0$. In other words, the covariates need to be uncorrelated with the error term from the same time period.

In contrast, in a panel regression model with individual effects, we have

$$y_{it} = a_i + b x_{it} + e_{it}$$

If we wish to allow for the possibility that $\mathrm{Cov}(a_i, x_{it})$ differs from zero, then $a_i$ is a "fixed" effect instead of a "random" effect. To understand what we need to assume to obtain unbiased estimates of $b$, we need to understand how the model i